- SANTA CLARA, CA—Over the last two years, the Oculus Rift has won the attention of virtual reality (VR) geeks, gamers, futurists, and Facebook. While you can currently buy Dev Kit 2 (DK2, shipping later this year), a consumer-ready product is expected for late 2014 or early 2015. To that end, Oculus VR has had to make a number of improvements to its head-mounted display (HMD) to ameliorate one very powerful side effect of wearing the Oculus Rift: nausea. As it turns out, putting a tiny screen very close to each eye and feeding it 3D-adjusted images is really tough on the brain. But in the DK2, Oculus has tried to address some of those issues, putting higher resolution screens in front of either eye, making adjustments to minimize latency, and adding an external camera to track head position.

- But there's one feature that Oculus VR has left out of its developer models that many experts say desperately needs to be in a consumer-facing model: eye tracking. Outside the Augmented Reality World Expo last week, Ars met with SensoMotoric Instruments (SMI), a German company that has been building eye-tracking systems since 1991. Christian Villwock, director of SMI's Eye and Gaze Tracking Systems business, was keen to show us a demo of his company’s latest project: hacking eye-tracking technology into a DK1 Oculus Rift.

- Putting such a system in an HMD like the Oculus Rift would reduce the sickness that many people complain about and would improve 3D images, Villwock told Ars.

- Eye tracking, of course, is nothing new. SMI has spent the last 20 years developing tech for use in market research (to show where a consumer’s eyes are drawn on a display or a website) and for use by disabled people (giving the severely paralyzed a means of communication). Villwock also told Ars that SMI is working with a company in Utah to develop a “digital polygraph test” based on pupil dilation and gaze patterns.

- That’s nice, but let’s get back to the games

- SMI is now pitching to gaming OEMs, seeking to put its proprietary algorithms and pupil-detecting hardware into laptops, game consoles, and peripherals. SMI recently partnered with Sony’s Magic Lab, an internal department that the entertainment company uses to test out new technology. Together, the two companies developed a remote eye-tracking system for use in a demo of Infamous: Second Son at this year’s GDC. SMI would not comment on whether it was talking to Sony about putting its technology into Sony's recently announced Project Morpheus HMD for PS4.

- Before we got to the Oculus Rift demo, Villwock let me try a demo of the remote eye-tracking platform similar to the one Sony and SMI showed off earlier this year, in which a lightweight bar attaches to the bottom of a computer screen, calibrates to track your eyes using infrared beams, and then uses that data to execute movement and selection tasks within a game. (SMI used a demo game that it had built in Unity.)

- The bar at the bottom of the screen uses IR sensors to track your gaze. Ideally, SMI said, such hardware would be built into screens at the OEM level.

- After calibrating the sensors by looking at a circle in the upper left and then in the lower right of the screen, I took the demo game for a spin. When I turned my head to the left, my point of view tracked to the left without having to touch the controller at all. I was then able to select a target and shoot it accurately just by looking at it and pulling the trigger—no aiming with the right thumbstick was necessary. Even when the target was off to the far left, or directly “beneath” me in the foreground, the shot was accurate.
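- SMI did not describe its calibration math, but two fixation points are, in principle, enough to fit a separate scale and offset for each screen axis. The sketch below assumes exactly that simple model (real trackers typically use more points and richer mappings), then "selects" whichever target lies nearest the calibrated gaze point within a tolerance radius. The names `AxisMap`, `fit_two_point`, and `pick_target`, and all the numbers in the usage example, are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class AxisMap:
    """Linear map from a raw tracker reading to a screen coordinate on one axis."""
    scale: float
    offset: float

    def apply(self, raw: float) -> float:
        return self.scale * raw + self.offset


def fit_two_point(raw_a, screen_a, raw_b, screen_b):
    """Fit independent x and y maps from two calibration fixations.

    raw_a/raw_b: uncalibrated (x, y) gaze readings taken while the user looks
    at the two calibration circles; screen_a/screen_b: the circles' known
    pixel positions (e.g. upper left and lower right).
    """
    maps = []
    for axis in (0, 1):
        d_raw = raw_b[axis] - raw_a[axis]
        if d_raw == 0:
            raise ValueError("calibration readings must differ on both axes")
        scale = (screen_b[axis] - screen_a[axis]) / d_raw
        offset = screen_a[axis] - scale * raw_a[axis]
        maps.append(AxisMap(scale, offset))
    return maps[0], maps[1]


def pick_target(gaze, targets, max_dist):
    """Return the name of the target closest to the gaze point, or None if no
    target lies within max_dist pixels (gaze data is noisy, so a tolerance
    radius is needed)."""
    best, best_d = None, max_dist
    for name, (tx, ty) in targets.items():
        d = math.hypot(tx - gaze[0], ty - gaze[1])
        if d <= best_d:
            best, best_d = name, d
    return best


if __name__ == "__main__":
    # Hypothetical readings for circles at (100, 100) and (1820, 980) on a 1920x1080 screen.
    map_x, map_y = fit_two_point((0.12, 0.15), (100, 100), (0.88, 0.85), (1820, 980))
    gaze = (map_x.apply(0.5), map_y.apply(0.5))  # roughly (960, 540)
    targets = {"crate": (950, 530), "drone": (1500, 300)}
    print(pick_target(gaze, targets, max_dist=80))  # prints "crate"
```

- Pulling the trigger would then act on whatever `pick_target` returns, which matches the demo's "look, then shoot" flow without any thumbstick aiming.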

- The GUI that lets you calibrate SMI's eye-tracking platform.

- Again, this technology is not particularly new; Swedish company Tobii showed Ars a demo of a very similar system in January 2013, during which Gaming Editor Kyle Orland used his eyes to destroy asteroids. But designers of eye-tracking systems have had to learn the technology's limits. Back in 2013, Kyle wrote, “The new control method required a bit of concentration, and the game's lack of on-screen cues made it a little difficult to understand why the system didn't always register my focused gaze.” Villwock told Ars on Wednesday that SMI specifically tried to avoid making eye movements the sole input for its game demos, because it wants eye tracking in games to “integrate in a more natural way.”

- “[There’s a] huge space of communications devices for disabled people... [where] you can only communicate with your eyes,” Villwock told Ars. But for people without those needs, “it doesn’t work; it’s a stupid thing because it hurts, and it causes cognitive load for you.”

- Instead, SMI is focusing on movement and selection functions in games, and it’s experimenting with making games more challenging and reactive to a player’s gaze, for example by having enemies appear in your peripheral vision and then dart away when you look in their direction. The company is also looking into consumer-facing features for computer programs, such as changing the text you’re looking at to make it easier to read, or showing an automatic translation when you’re reading a foreign language and the software senses you’re stuck on a word.
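- One plausible way to drive the gaze-reactive enemies described above is to measure the angle between the player's gaze direction and the direction to each enemy, then branch on that angle. This is a minimal sketch under assumed thresholds (5 degrees for "looking at it", 30 degrees for "peripheral"); SMI did not say how its demo works, and `enemy_reaction` and the state names are hypothetical.

```python
import math

# Hypothetical thresholds; a real game would tune these per player and device.
LOOKED_AT_DEG = 5.0     # gaze within this angle counts as "looking at" the enemy
PERIPHERAL_DEG = 30.0   # beyond this angle the enemy is treated as peripheral


def angle_between_deg(u, v):
    """Angle in degrees between two 3D direction vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    cos_angle = max(-1.0, min(1.0, dot / (norm_u * norm_v)))  # clamp rounding error
    return math.degrees(math.acos(cos_angle))


def enemy_reaction(gaze_dir, eye_pos, enemy_pos):
    """Decide how a gaze-aware enemy reacts this frame.

    Returns "dart_away" when the player looks at it, "lurk" when it sits in
    the player's peripheral vision, and "hold" in between.
    """
    to_enemy = tuple(e - p for e, p in zip(enemy_pos, eye_pos))
    eccentricity = angle_between_deg(gaze_dir, to_enemy)
    if eccentricity <= LOOKED_AT_DEG:
        return "dart_away"
    if eccentricity >= PERIPHERAL_DEG:
        return "lurk"
    return "hold"
```

- Working in angles rather than screen pixels keeps the logic the same whether the gaze comes from a bar under a monitor or from sensors inside an HMD.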

- Getting closer to the eye sockets

- Having eye-tracking hardware to augment a traditional gaming experience is cool, but as decades of video gaming have proved, it’s hardly necessary to the experience. However, the kind of eye-tracking technology that SMI put in its Oculus Rift may, in fact, be crucial to making HMDs consumer-ready.

- As mentioned above, motion sickness has been an issue with the Oculus Rift and other low-cost HMDs. There are many reasons for this: lag in the frame rate and a less-than-exceptional resolution can cause a feeling of nausea, as can displays that don’t respond to head position tracking. Oculus VR tried to fix these issues in the second version of its headset and was largely successful, as Ars writer Sam Machkovech wrote in March when he tried it at GDC 2014.

- Another big issue is that each of the Rift's screens projects its own image, and together the two images create a 3D image in the user’s brain. If those projections are off by even a millimeter, the brain sees warped 3D images, causing severe motion sickness.

- To get a really accurate projection, the HMD needs to know the interpupillary distance of the wearer’s eyes. In the Oculus Rift, the default distance is set at 64 mm, which is close to the human average, but many people have eyes set wider apart or closer together. The resulting distortion is even more complex because, as you move around in the virtual space, 3D objects distort differently depending on how far your own interpupillary distance is from 64 mm, as Oliver Kreylos, a VR researcher at UC Davis, describes in his excellent video below.
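- A deliberately idealized stereo model shows why a wrong interpupillary distance rescales the virtual world. Assume the two virtual cameras are rendered 64 mm apart, the viewer's eyes are some other distance apart, and each eye sees exactly the ray directions its camera rendered (no lens offsets or distortion correction are modelled). Triangulating those rays then places every point at its true position multiplied by actual/rendered IPD. The function name `perceived_point` is hypothetical. Note that for Kreylos's eyes (60 mm) this model predicts only about a 6 percent shrink, much less than the roughly 25 percent he reports later in this article, which suggests the real effect depends on details this sketch leaves out.

```python
def perceived_point(point, rendered_ipd, actual_ipd):
    """Where a viewer triangulates a virtual point, in an idealized stereo model.

    point: (x, z) in metres with z > 0; eyes lie on the x axis, centred on the
    origin, looking along +z. The virtual cameras sit rendered_ipd apart; the
    real eyes sit actual_ipd apart and are assumed to see exactly the ray
    directions their cameras rendered.
    """
    x, z = point
    left_cam, right_cam = -rendered_ipd / 2, rendered_ipd / 2
    # Rendered ray directions from each camera to the point (same z component).
    dir_left = (x - left_cam, z)
    dir_right = (x - right_cam, z)
    left_eye, right_eye = -actual_ipd / 2, actual_ipd / 2
    # Intersect left_eye + t*dir_left with right_eye + s*dir_right.
    # Equal z components force s == t, so the x equation gives
    # t * (dir_left_x - dir_right_x) = right_eye - left_eye.
    t = (right_eye - left_eye) / (dir_left[0] - dir_right[0])
    return (left_eye + t * dir_left[0], t * dir_left[1])


if __name__ == "__main__":
    # Default 64 mm rendering viewed by someone whose pupils are 60 mm apart.
    print(perceived_point((0.1, 2.0), rendered_ipd=0.064, actual_ipd=0.060))
    # about (0.094, 1.875): the whole scene scaled by 60/64
```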

- On top of that, Kreylos explains, the distance between your pupils is not constant. Human eyes swivel inward so that light from the object you are looking at lands precisely on the high-resolution fovea of each retina, and how far they swivel depends on how virtually “far away” the object is. If the screens display something particularly close to you in the virtual space, your eyes “swivel in” (as when you brought a finger to your nose to go cross-eyed as a kid), and without eye tracking that can cause nausea, because the image projected by the Oculus will appear distorted as well.

- Projection properties of, and scale issues with, the Oculus Rift. Kreylos has also posted a follow-up video.
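- The inward swivel Kreylos describes can be estimated with basic geometry: each eye turns by atan(IPD / (2 × distance)) to fixate a point straight ahead, and because the pupil sits in front of the eye's rotation centre, it shifts toward the nose as the eye turns. The sketch assumes a pupil roughly 10 mm in front of the rotation centre, a rough figure rather than a measured one; the function names are hypothetical.

```python
import math


def vergence_deg(ipd_mm, distance_mm):
    """Total convergence angle when both eyes fixate a point straight ahead."""
    return math.degrees(2 * math.atan(ipd_mm / (2 * distance_mm)))


def pupil_separation_mm(ipd_mm, distance_mm, pivot_to_pupil_mm=10.0):
    """Approximate pupil-to-pupil distance while fixating a point straight ahead.

    ipd_mm is the separation of the eyes' rotation centres (equal to the pupil
    separation when looking far away). Each eye turns inward by
    atan(ipd / (2 * distance)); the pupil, about pivot_to_pupil_mm in front of
    the rotation centre (an assumed rough figure), moves toward the nose by
    pivot_to_pupil_mm * sin(that angle).
    """
    turn = math.atan(ipd_mm / (2 * distance_mm))
    return ipd_mm - 2 * pivot_to_pupil_mm * math.sin(turn)


if __name__ == "__main__":
    for d in (10_000, 1_000, 300, 100):  # fixation distance in mm
        print(d, round(vergence_deg(64, d), 1), round(pupil_separation_mm(64, d), 1))
```

- Under these assumptions, a 64 mm pupil spacing shrinks by about 6 mm when fixating something 10 cm away, so no single fixed rendering distance is right for every depth; that changing value is what continuous eye tracking could measure.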

- While there are ways to adjust the lenses and the firmware in the Oculus Rift to accommodate different interpupillary distances, the fixes are complex and definitely not consumer-ready. (Note that, because the Rift comes with lenses to make the images on the screen visible at such a close distance, what the screen displays has to be corrected to account for the lens. This makes interpupillary distance adjustments much more complicated.) Furthermore, you can’t really share the Rift with others if you have to spend time adjusting for different faces. Villwock said that SMI put automatic eye-tracking in the Rift so it will sense your eyes without even needing to calibrate, but calibration is available to increase the accuracy of the eye-tracking. “It’s a one-second thing to do calibration,” Villwock said. From there, the sensors around the lenses take constant measurements of where you're looking and change the image accordingly.
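- The lens correction mentioned above is usually done by pre-warping each eye's image so the lens's own distortion cancels it. A common model scales each point's offset from the lens axis by a polynomial in the squared radius. The sketch below assumes that model; the coefficients are illustrative only, not values from Oculus's SDK, and `sample_coord` is a hypothetical name.

```python
def sample_coord(px, py, lens_cx, lens_cy, k=(1.0, 0.22, 0.24, 0.0)):
    """For a display pixel at (px, py), return where to sample the rendered image.

    Coordinates are normalized, with radius measured from the lens axis
    (lens_cx, lens_cy). The radial scale k0 + k1*r^2 + k2*r^4 + k3*r^6 grows
    with distance from the axis, so the on-screen image is squeezed toward
    the center (barrel distortion), which the magnifying lens's pincushion
    distortion then roughly cancels. Coefficients are illustrative only.
    """
    dx, dy = px - lens_cx, py - lens_cy
    r2 = dx * dx + dy * dy
    scale = k[0] + k[1] * r2 + k[2] * r2 ** 2 + k[3] * r2 ** 3
    return lens_cx + dx * scale, lens_cy + dy * scale


if __name__ == "__main__":
    # Sampling offset grows faster than linearly away from the lens axis.
    for r in (0.0, 0.25, 0.5, 0.75):
        print(r, sample_coord(r, 0.0, 0.0, 0.0))
```

- Because the warp is centred on the lens axis while the 3D projection should be centred on the eye, a wearer whose pupils do not line up with the lenses ends up with two different centres to reconcile, which is roughly why interpupillary distance adjustments get tangled up with the lens correction.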

- As he put together the demo Oculus setup, Villwock explained that SMI had purchased the DK1 Oculus Rift on its own, and he stressed that his company was not in a partnership with Oculus on the project. To get the necessary hardware in, SMI sawed two tiny squares at the bottom of the screens inside the Rift, added an infrared sensor in each of the squares, and then placed six LEDs around each screen. The “infrared camera and LEDs around the lenses are used to create a reflection point or six reflection points on the eye, and with those six reflection points we have self-learning algorithms to process it,” Villwock explained. SMI's system can track a user's eyes in 3D (even picking up retina details, Kreylos wrote), which should theoretically make it more accurate than a simple 2D pupil-tracking system.
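- SMI's "self-learning algorithms" are proprietary, but the reflection points Villwock mentions are the basis of the textbook pupil-centre/corneal-reflection (PCCR) technique. The LEDs produce small glints on the cornea that stay roughly fixed as the eye rotates, while the pupil moves, so the vector from the glints to the pupil centre tracks gaze. A calibration step then fits a mapping from that vector to screen coordinates. The sketch below is this simpler 2D interpolation approach, not SMI's 3D method; it assumes an affine mapping fitted by least squares for brevity (practical systems often use higher-order polynomials), and every function name is hypothetical.

```python
def centroid(points):
    """Mean (x, y) of a list of points, e.g. the six LED glints."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def pupil_glint_vector(pupil_center, glints):
    """Vector from the glint centroid to the pupil centre, in camera pixels."""
    gx, gy = centroid(glints)
    return (pupil_center[0] - gx, pupil_center[1] - gy)


def _solve3(m, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    a = [list(row) + [bi] for row, bi in zip(m, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[piv][col]) < 1e-12:
            raise ValueError("calibration points are degenerate")
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        x[r] = (a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))) / a[r][r]
    return x


def fit_affine(vectors, targets):
    """Least-squares fit of screen = c0*vx + c1*vy + c2, separately per axis.

    vectors: pupil-glint vectors recorded while the user fixated known
    calibration targets (at least three, not all collinear).
    """
    rows = [(vx, vy, 1.0) for vx, vy in vectors]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in (0, 1):
        atb = [sum(r[i] * t[axis] for r, t in zip(rows, targets)) for i in range(3)]
        coeffs.append(_solve3(ata, atb))
    return coeffs


def gaze_point(coeffs, v):
    """Map a pupil-glint vector to an estimated (x, y) gaze point."""
    return tuple(c[0] * v[0] + c[1] * v[1] + c[2] for c in coeffs)
```

- Using the glint centroid rather than a single glint is one simple way to put all six reflection points to work; it averages out noise in any one glint's position.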

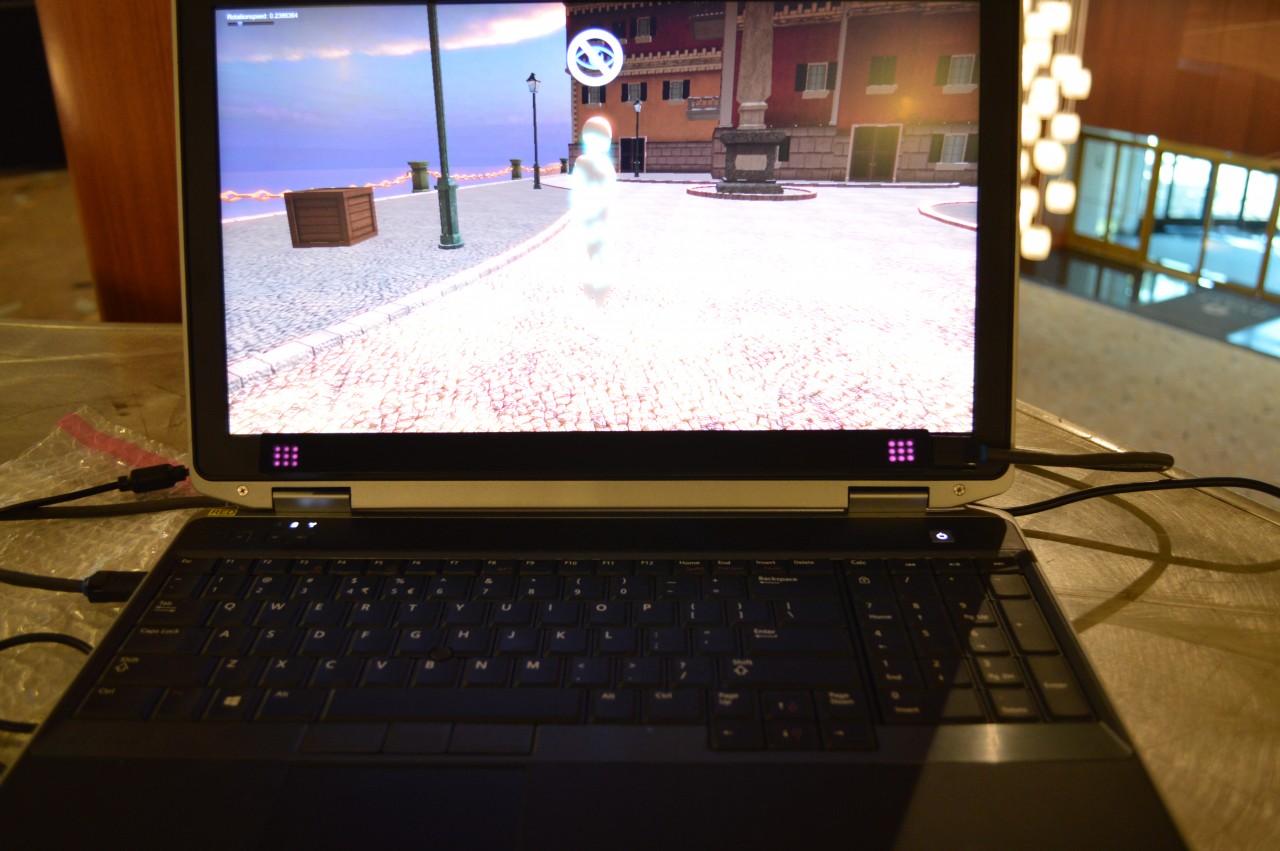

- Christian Villwock of SMI uses eye-tracking software in a demo.

- Megan Geuss

- Despite the hacked look of the lenses inside the Oculus Rift, Villwock told Ars that SMI already had a more elegant solution in the works that would not require large sawed-out squares in the bottom field of vision. “We are close to consumer-ready technology,” Villwock assured Ars, saying that SMI was mostly pitching HMD OEMs to carry its product but that it might consider making the tracking system a consumer add-on in the future.

- Kreylos, who has been researching low-cost HMDs for about six years, also had a chance to test SMI's Rift while the company was in California, and he told Ars in an e-mail that eye-tracking technology would be necessary for any mass-market HMD in the future. "Eye tracking is a must for consumer-facing HMDs, primarily for automatic calibration. Oculus didn't include it in the first and second development kit for price, complexity, and time reasons... The initial Rift VR developers and Kickstarter backers were gung-ho enough about VR to tolerate severe simulator sickness and even to build up resistance to it by exposing themselves voluntarily ('getting their VR legs'). 'Normal' people would not do that. As a result, there are loud rumors about Oculus already considering eye tracking for the consumer version of the Rift."

- Ars contacted Oculus VR about whether the consumer version of the Rift would indeed have eye-tracking, but we did not receive a response. We'll update if we do hear back on that.

- After his own demo, Kreylos wrote that he experienced lag and inaccuracy in SMI's gaze tracking before calibration, despite the fact that SMI's technology should work calibration-free. He suggested that this could have been because he was wearing contacts, making his corneas less than ideal (I am also very near-sighted and use corrective lenses, so perhaps we suffered the same handicap), or because SMI’s algorithms were processing 2D measurements of his eyes even though SMI incorporated 3D eye-tracking hardware into the headset (you can read more about that on his blog).

- Measuring my interpupillary distance roughly in the mirror, I found that my eyes are a bit farther apart than average, at about 66 mm, so I would be a prime candidate for eye tracking in the Oculus Rift. In truth, when I tried the Rift with SMI’s eye-tracking, I still felt a little dizzy as I walked through a room filled with boxes, “selected” each one with my eyes, and then pulled a trigger to move or explode it. But all of the objects in the room looked completely normal, not slightly warped as might have been the case without eye tracking. At first I was disappointed that I felt dizzy, but then I remembered that SMI had hacked this system onto a DK1 Rift, which had lag and resolution problems to begin with, not to mention a lack of head-position tracking and high image persistence. I chalked the dizziness up to the fact that I was awfully close to a 1280×800 display with a little too much lag.

- Still, Kreylos told Ars in an e-mail that without eye-tracking, HMDs are difficult to use for many people. "I believe, and I don't have hard data to back this up, that miscalibration is the next biggest cause of simulator sickness behind high latency. I wrote about that at some length in one of my first blog posts. I myself am very sensitive to miscalibration, probably because my eyes are significantly closer together than the population average (60 mm vs 63.5 mm). To me, an uncalibrated Oculus Rift makes virtual objects, including my own virtual body, appear about 25 percent smaller than [they are] in reality and squishes the depth dimension similarly. That means that the virtual world warps and wobbles when I move through it, which feels like being quite drunk and causes nausea. This disappears once I carefully calibrate the display, but that is a process that would be hard to replicate for end users, because it relies on indirect observations and a deep understanding of 3D projection. Proper 3D eye tracking, like that provided by SMI's system, would completely remove the need for such manual calibration; the HMD would 'just work.'"

- Despite my limited time with the eye-tracking Oculus Rift, I found it quite freeing to aim exclusively with my eyes in the small Unity game that SMI had thrown together for the demo. With low-cost eye-tracking technology like SMI's, we could be on our way to a future where HMDs are household items.

Why eye tracking could make VR displays like the Oculus Rift consumer-ready

Reviewed by Anonymous on June 29, 2014